The hardware build of the five-node Raspberry Pi 4 cluster is complete. The parts list:

- 5 Raspberry Pi 4 (4GB) single-board computers
- 5 ADATA 120GB M.2 SSDs
- 5 64GB SD cards for boot and the vanilla image
- 1 PicoCluster 8-port gigabit switch
- 1 PicoCluster PDU
- 2 60mm fans
- 5 Pimoroni Blinkt RGB LED strips

The Lucite 5-node cube, PDU, and 8-port switch were purchased from PicoCluster as a package called the Pico 5H.

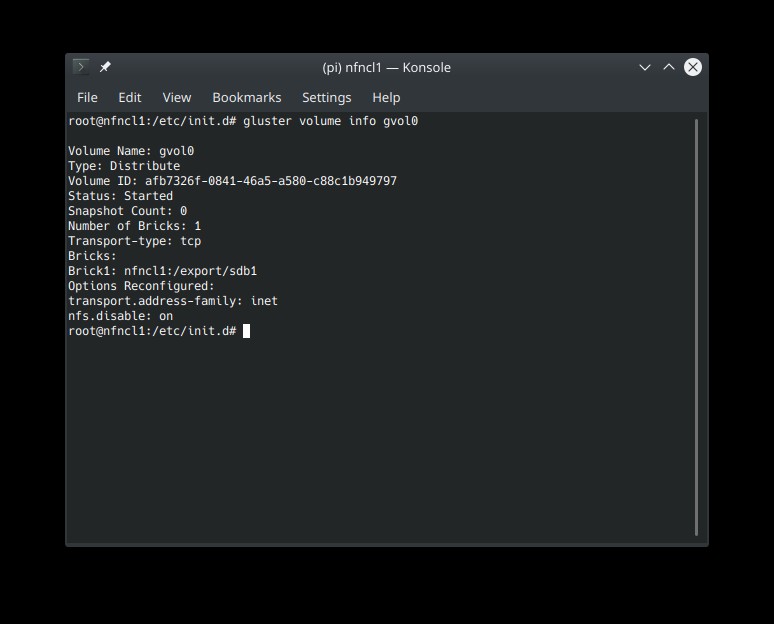

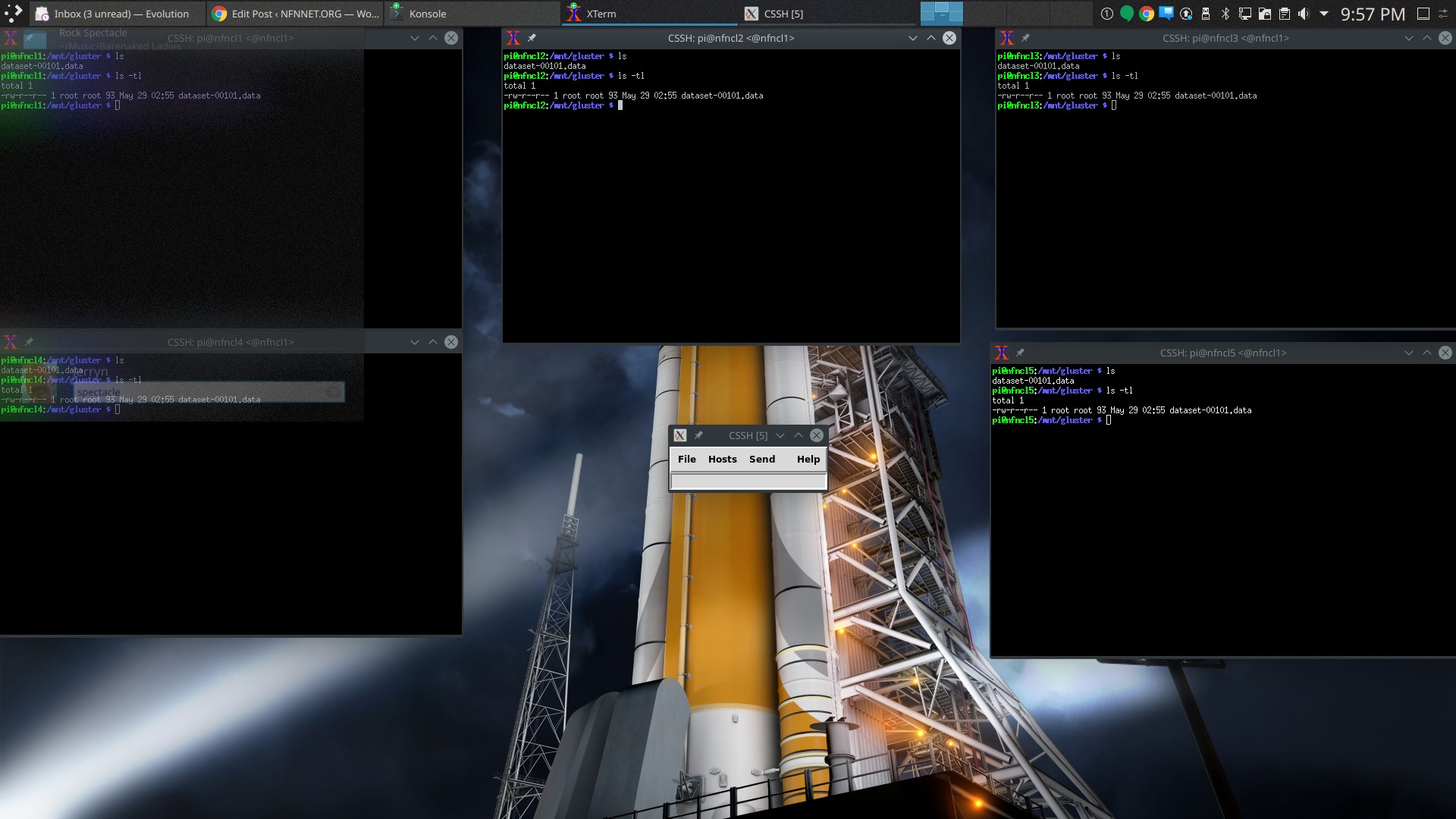

Raspbian Buster Lite is installed on each SD card, which holds the boot partition and a vanilla OS root file system. When the vanilla image boots, I can log in to the master node and connect to all of the nodes with clusterssh. At this point the cluster is ready to be configured for an application.

The USB 3.0 attached SSD on each node is partitioned and formatted, and the vanilla SD image is rsynced over to this local storage. Then cmdline.txt and the root file system entry in /etc/fstab are updated to use the SSD instead of the SD card. After the cluster reboots, the system is ready to be configured.

I am currently writing Ansible playbooks to automate the installation of OpenMPI, Fabric, Glances, TensorFlow and some modeling tools. Once those are complete, I'll write another Ansible playbook to restore the system to the vanilla image; it will update cmdline.txt and /etc/fstab to point back at the root file system on the SD card. After the restore-to-vanilla playbook is done, I'll write another playbook to configure the cluster for Kubernetes. This process makes it easy to refresh the cluster to a clean system before configuring it for a new lab or application.
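The root switch described above boils down to two text edits. Here is a minimal Python sketch of them, assuming the Raspbian default of identifying partitions by PARTUUID (`root=PARTUUID=...` in cmdline.txt and `PARTUUID=...` in /etc/fstab). The function names and PARTUUID values are hypothetical, not taken from the author's playbooks; real values can be looked up with `blkid`. Passing the SSD's PARTUUID moves the root to the SSD, and passing the SD card's root PARTUUID is the restore-to-vanilla direction. Which fstab needs editing depends on which root file system you are changing (for example the copy on the SSD after the rsync), so the paths are parameters.

```python
from pathlib import Path
import shutil


def set_cmdline_root(cmdline: str, root_spec: str) -> str:
    """Return cmdline.txt contents with the root= kernel parameter set to root_spec."""
    params = cmdline.split()  # cmdline.txt is a single line of space-separated parameters
    replaced = False
    for i, param in enumerate(params):
        # Match only root=..., not rootfstype= or rootwait
        if param.startswith("root="):
            params[i] = "root=" + root_spec
            replaced = True
    if not replaced:
        params.append("root=" + root_spec)
    return " ".join(params) + "\n"


def set_fstab_root(fstab: str, root_spec: str) -> str:
    """Return fstab contents with the device field of the '/' entry set to root_spec."""
    out = []
    for line in fstab.splitlines():
        fields = line.split()
        is_entry = bool(fields) and not fields[0].startswith("#")
        if is_entry and len(fields) >= 2 and fields[1] == "/":
            fields[0] = root_spec
            line = "  ".join(fields)  # original column alignment is not preserved
        out.append(line)
    return "\n".join(out) + "\n"


def switch_root(root_spec: str,
                cmdline_path: Path = Path("/boot/cmdline.txt"),
                fstab_path: Path = Path("/etc/fstab")) -> None:
    """Point cmdline.txt and fstab at root_spec, keeping a .bak copy of each file."""
    for path, update in ((cmdline_path, set_cmdline_root), (fstab_path, set_fstab_root)):
        shutil.copy2(path, path.with_name(path.name + ".bak"))
        path.write_text(update(path.read_text(), root_spec))


if __name__ == "__main__":
    # Hypothetical PARTUUIDs; demo on in-memory strings so no real files are touched
    cmdline = ("console=serial0,115200 console=tty1 root=PARTUUID=6c586e13-02 "
               "rootfstype=ext4 elevator=deadline fsck.repair=yes rootwait\n")
    fstab = ("proc  /proc  proc  defaults  0  0\n"
             "PARTUUID=6c586e13-01  /boot  vfat  defaults  0  2\n"
             "PARTUUID=6c586e13-02  /  ext4  defaults,noatime  0  1\n")
    ssd_root = "PARTUUID=abcd1234-01"
    print(set_cmdline_root(cmdline, ssd_root), end="")
    print(set_fstab_root(fstab, ssd_root), end="")
```

`switch_root` writes to real system files, so it would need to run as root and be followed by a reboot. In an Ansible playbook the same edits could instead be made with the `lineinfile` or `replace` modules.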